Large Language Models (LLMs) are hot stuff at present. How to use them, and how to integrate them into existing workflows, is still an open area of investigation for many people and organisations.

I'm currently using VSCode, the Continue 'open-source autopilot' extension, and Ollama.

This setup mirrors most of what GitHub Copilot can do, but is faster, cheaper, and more private.

Continue

Continue is a VSCode extension that provides a 'coding autopilot'. It's a little like GitHub Copilot, but it's open-source, and you can plug your own models into it. By default it uses OpenAI's GPT models.

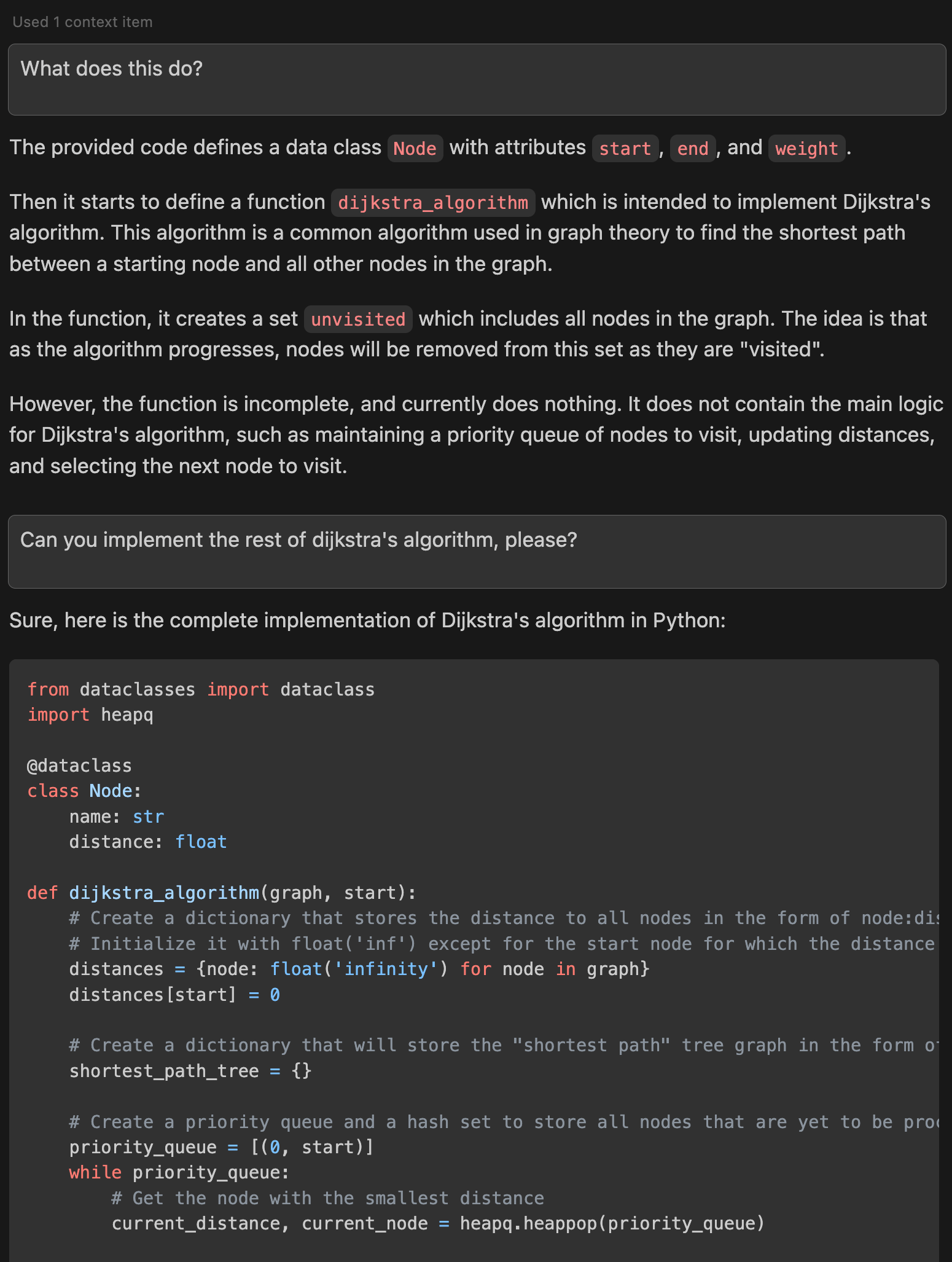

As an example, I started writing a quick snippet of graph-traversal code, and then got Continue to help.

```python
from dataclasses import dataclass


@dataclass
class Node:
    start: int
    end: int
    weight: int


def dijkstra_algorithm(graph, start: Node) -> tuple[dict, dict]:
    unvisited = set(graph.nodes)
    # ... the rest was left for Continue to write
```

I highlighted this code, invoked Continue with Cmd-M (on a Mac), and asked the LLM what the code did and whether it could write some more.

... the output is truncated, since it's quite long.
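Continue's actual reply isn't reproduced here. For readers curious where the snippet was heading, this is a minimal sketch of one conventional completion, not the LLM's output: Dijkstra's algorithm with a `heapq` priority queue, treating each `Node` as a weighted directed edge from `start` to `end`. The `Graph` class with `nodes` and `edges` attributes is a hypothetical assumption (the snippet only touches `graph.nodes`), and `start` is taken to be a vertex id rather than a `Node`.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass, field


@dataclass
class Node:
    # Despite its name, each Node describes a weighted directed edge: start -> end.
    start: int
    end: int
    weight: int


@dataclass
class Graph:
    # Hypothetical container; the original snippet only assumes `graph.nodes`.
    # `nodes` must list every vertex, including edge endpoints.
    nodes: set[int] = field(default_factory=set)
    edges: list[Node] = field(default_factory=list)


def dijkstra_algorithm(graph: Graph, start: int) -> tuple[dict, dict]:
    """Return (distance, previous-vertex) maps for shortest paths from `start`."""
    # Group outgoing edges by their source vertex.
    adjacency: dict[int, list[Node]] = {v: [] for v in graph.nodes}
    for edge in graph.edges:
        adjacency.setdefault(edge.start, []).append(edge)

    distances = {v: float("inf") for v in graph.nodes}
    distances[start] = 0
    previous = {v: None for v in graph.nodes}
    unvisited = set(graph.nodes)
    queue = [(0, start)]  # min-heap of (distance so far, vertex)

    while queue:
        dist, vertex = heapq.heappop(queue)
        if vertex not in unvisited:
            continue  # stale entry: this vertex's distance is already final
        unvisited.remove(vertex)
        # Relax every edge leaving the current vertex.
        for edge in adjacency.get(vertex, []):
            candidate = dist + edge.weight
            if candidate < distances.get(edge.end, float("inf")):
                distances[edge.end] = candidate
                previous[edge.end] = vertex
                heapq.heappush(queue, (candidate, edge.end))

    return distances, previous


# Example: the route 1 -> 3 -> 2 -> 4 (2 + 3 + 1) beats going via 1 -> 2 directly.
graph = Graph(nodes={1, 2, 3, 4},
              edges=[Node(1, 2, 7), Node(1, 3, 2), Node(3, 2, 3), Node(2, 4, 1)])
distances, previous = dijkstra_algorithm(graph, start=1)
print(distances[4])  # 6
print(previous[4])   # 2
```

Returning the `previous` map alongside the distances lets you rebuild any shortest path by walking backwards from the target until you reach `None`.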

Ollama

The Continue extension is great, but it defaults to OpenAI's GPT models, which are not open-source, free, or private. If you need any of those things, you need to use something else.

Meta's "Llama" LLM was leaked online earlier this year, and the open-source community pounced on it. One of the most interesting results is llama.cpp, a C/C++ implementation of Llama inference. It can run on a CPU instead of a GPU, and has great support for Apple Silicon.

Ollama takes this idea and makes it super easy to install and run a wide range of open models.

On a Mac, running the Mistral 7B model is as simple as:

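```shell
brew install ollama
# If `ollama run` can't reach the server, start it first with `ollama serve` (e.g. in another terminal).
ollama run mistral
```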

Once the model has downloaded, you get an interactive prompt in the terminal:

```
>>> are you an AI?
Yes, I am an artificial intelligence. I was created to assist with information and answer questions to the best of my ability. How may I help you today?
```
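The same question can be asked programmatically, because the Ollama server also exposes an HTTP API on port 11434 (the address used as `apiBase` in the Continue config later in this post). This is a minimal sketch using only the standard library; it assumes the server is running and the `mistral` model has been pulled, and the helper names `build_generate_request` and `ask` are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def build_generate_request(prompt: str, model: str = "mistral",
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST to Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "mistral") -> str:
    """Send the prompt to a running Ollama server and return the reply text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)  # one JSON object, since stream is False
    return body["response"]
```

With the server up, `print(ask("are you an AI?"))` should print a reply much like the terminal session above. Setting `"stream": False` trades token-by-token output for a single, simpler JSON response.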

If you don't like the terminal as much as I do, there is also a project that provides a web UI for Ollama.

Ollama + Continue

Continue has a JSON config file (usually `~/.continue/config.json`) that lists the models it can use, and the extension has a quick switcher for changing between them. It's easy to add models to this list.

The defaults are OpenAI's GPT models, but you can add Ollama-backed ones too, following this pattern:

```json
{
  "title": "Mistral",
  "model": "mistral-7b",
  "apiBase": "http://localhost:11434",
  "provider": "ollama"
}
```
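If you'd rather script that change than edit the file by hand, here is a minimal sketch that appends the entry above to the config's model list. It assumes the file lives at `~/.continue/config.json` and holds a top-level `"models"` array (check your own file first), and `add_model` is a hypothetical helper name. It skips the entry if a model with the same `title` is already listed.

```python
import json
from pathlib import Path

# Assumed location of Continue's config file.
CONFIG_PATH = Path.home() / ".continue" / "config.json"

# The Ollama-backed entry from the post.
MISTRAL = {
    "title": "Mistral",
    "model": "mistral-7b",
    "apiBase": "http://localhost:11434",
    "provider": "ollama",
}


def add_model(entry: dict, path: Path = CONFIG_PATH) -> bool:
    """Append `entry` to the assumed "models" list; return True if the file changed."""
    config = json.loads(path.read_text()) if path.exists() else {}
    models = config.setdefault("models", [])
    # Avoid duplicates: match on the human-readable title.
    if any(m.get("title") == entry["title"] for m in models):
        return False
    models.append(entry)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2) + "\n")
    return True
```

Calling `add_model(MISTRAL)` once adds the entry; calling it again returns `False` and leaves the file alone. Note that rewriting the file this way normalises its formatting.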

Once set up, you can use Continue with an Ollama-backed model and get a private, local, free, and open-source LLM to help you code.